What Results Measures Do Donors Use to Evaluate Aid Effectiveness?

The Paris Declaration on Aid Effectiveness and the Accra Agenda for Action play an important role in shaping bilateral and multilateral donor organizations’ results measurement priorities. Donors increasingly prioritize approaches to evaluation based on results-based management and value for money. Each donor, however, has its own methods, leading to significant variability in the results measures used to evaluate aid effectiveness.

This is the third in a series of four posts presenting evidence and trends from the Evans School Policy Analysis and Research (EPAR) Group’s report on donor-level results measurement systems. The first post in the series provides an introduction to donor-level results measurement systems and outlines the theoretical framework, data, and methods for the research. The second post in the series examines the systems and processes donors use for measuring aid effectiveness.

This post focuses on the types of results measures donors use to evaluate their performance. EPAR examined annual reports, evaluation plans and policies, and peer reviews for 22 donor results measurement systems: 12 bilateral organizations and 10 multilateral organizations. Each section of this post assesses donors' use of one of four types of results measures: 1) donor and recipient alignment, 2) outputs and implementation, 3) outcomes and impact, and 4) costs and cost-effectiveness.

Donor and Recipient Alignment

As a result of international agreements on aid effectiveness, indicators of coordination and alignment between donors and recipients have gained importance as donor performance measures. Donor-recipient alignment refers to using in-country systems for financial management and monitoring and evaluation (M&E) and supporting recipient-country development agendas. Lack of alignment can overburden in-country systems with multiple reporting requirements and decrease aid effectiveness. EPAR explores aid alignment from the recipient-country perspective in a prior post.

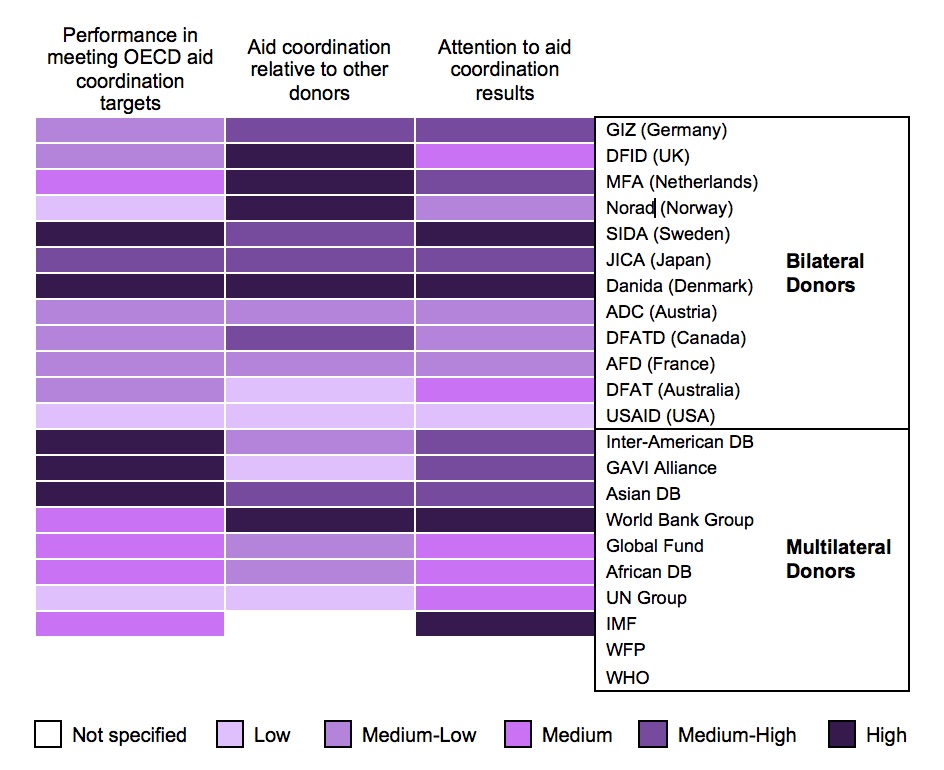

EPAR assigns donors a rating from low to high based on donors’ overall performance in meeting OECD coordination targets for six indicators: strengthening capacity by coordinated support, using recipient-country public financial management systems, avoiding parallel implementation structures, maintaining untied aid, conducting joint recipient-country missions, and ensuring joint analytic work (Figure 1, column 1). Two donors are rated “high”, indicating that at least five of six coordination and alignment targets were met. Five donors are rated “medium-high” for three or four targets met, nine donors are rated “medium-low” for two targets met, and three donors are rated “low” for one or fewer targets met.
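The rating bands above amount to a simple threshold rule on the number of targets met. The sketch below is illustrative only: the function name and the integer input are our own, not EPAR's code, and it assumes each of the six targets is simply counted as met or not met.

```python
# Rating bands for the six OECD coordination targets described above.
# Function name and integer input are illustrative, not EPAR's code.

def coordination_rating(targets_met: int) -> str:
    """Return the coordination rating for a donor that met `targets_met`
    of the six coordination and alignment targets."""
    if not 0 <= targets_met <= 6:
        raise ValueError("targets_met must be between 0 and 6")
    if targets_met >= 5:
        return "high"          # at least five of six targets met
    if targets_met >= 3:
        return "medium-high"   # three or four targets met
    if targets_met == 2:
        return "medium-low"    # two targets met
    return "low"               # one or fewer targets met


if __name__ == "__main__":
    # A hypothetical donor meeting four of six targets.
    print(coordination_rating(4))  # prints medium-high
```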

We also consider a composite “overall aid effectiveness index” that ranks most donors based on their performance on the 2008 Survey on Monitoring the Paris Declaration (column 2). A donor’s overall rating on this index is the average of their rating for each indicator of aid effectiveness. An equal number of donors (five) are rated “high”, “medium-high”, and “medium-low”. Four donors are rated “low”.
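Averaging per-indicator ratings requires putting the rating labels on a numeric scale. The sketch below assumes a hypothetical 1 to 4 scale (low = 1 through high = 4) and a round-half-up rule for mapping the average back to a band; the 2008 Survey's actual scoring may differ, so treat both choices as assumptions.

```python
from statistics import mean

# Hypothetical numeric scale for the four rating bands (an assumption).
SCORES = {"low": 1, "medium-low": 2, "medium-high": 3, "high": 4}
BANDS = {score: label for label, score in SCORES.items()}


def overall_rating(indicator_ratings: list[str]) -> tuple[float, str]:
    """Average a donor's per-indicator ratings and map the mean back
    to the nearest band (rounding halves up, an assumed convention)."""
    if not indicator_ratings:
        raise ValueError("need at least one indicator rating")
    avg = mean(SCORES[r] for r in indicator_ratings)
    band = BANDS[int(avg + 0.5)]
    return avg, band


if __name__ == "__main__":
    # A hypothetical donor rated on four indicators.
    print(overall_rating(["high", "high", "medium-low", "low"]))
```

With these inputs the scores 4, 4, 2, and 1 average to 2.75, which rounds to the "medium-high" band.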

Ratings for meeting targets and for relative performance in aid coordination are combined with qualitative information from donor reports and peer reviews to assign an overall rating of donors' level of attention to coordination (column 3). While all donors have a stated aim of increasing aid effectiveness in terms of the indicators laid out by the Paris Declaration, donors with higher ratings place greater emphasis on coordination in measuring their aid performance. Three donors are rated "high", including Danida (Denmark), whose [2011 results framework](https://www.oecd.org/dac/peer-reviews/2011 – DanidasFrameworkforManagingforDevelopmentResults20112014Final.pdf) stresses that results should be generated by partner systems rather than by donors. Fifteen donors are rated "medium-high", "medium", or "medium-low". For example, a [2013 OECD peer review](https://www.oecd.org/dac/peer-reviews/OECD Australia FinalONLINE.pdf) finds that DFAT (Australia) has made good progress in delivering aid but still has room for improvement. One donor, USAID (United States), is rated "low" for attention to coordination.

Donors report three broad challenges in coordinating results measurement with aid recipients. The first is improving coordination through additional actions, such as reducing bureaucracy and supporting decentralization. For example, a Multilateral Aid Review of the Inter-American Development Bank (IDB) states that "some partners are concerned about bureaucracy and limited decentralization". The second is coordinating results measurement with diverse stakeholders: a 2014 review of JICA suggests that Japan's aid policies could better reflect the diversity of recipient countries. The third is coordinating joint evaluations with partners: a 2013 evaluation of USAID evaluations finds that 65 percent of evaluation teams lacked members from local populations.

Outputs and Implementation

Donors often assess performance by looking at outputs, the direct results of a project or program’s activities. Examples of indicators for measuring outputs include: number of insecticide-treated bed nets delivered, number of teachers trained, and kilometers of roads or railways constructed.

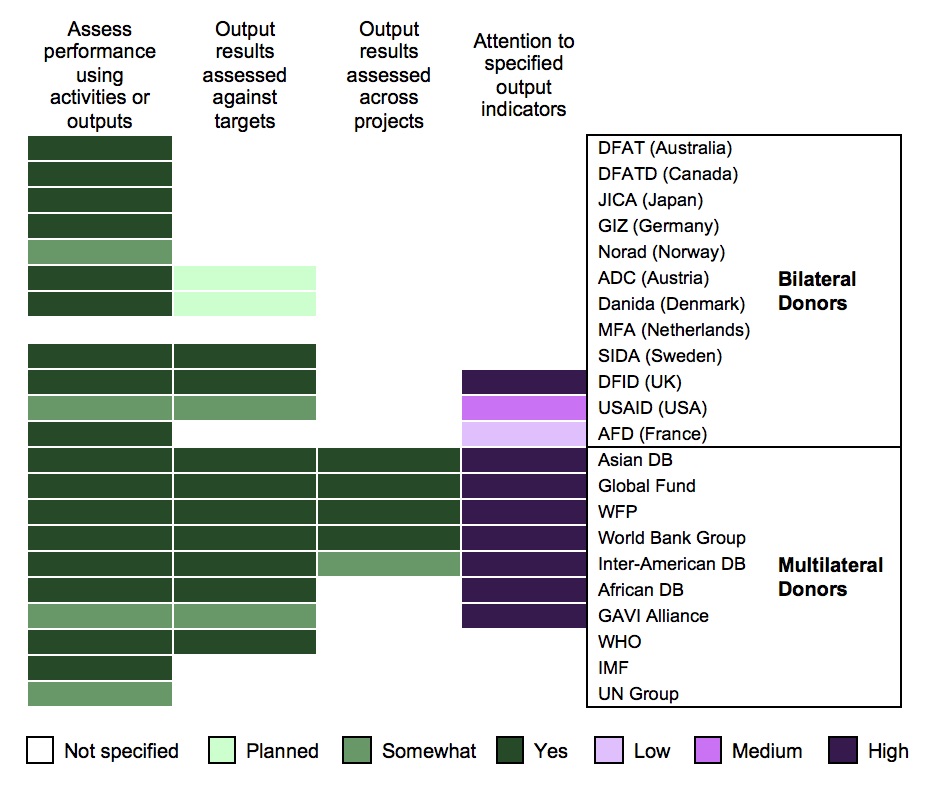

We find that nine of 12 bilateral donors and eight of ten multilateral donors assess performance in terms of outputs from their projects (Figure 2, column 1). Two multilateral donors and two bilateral donors “somewhat” assess performance by outputs. In all four of these cases, external reviewers find that reporting on outputs occurs for some projects, but is not comprehensive. For one donor, documents included in our review do not provide evidence on assessing performance in terms of outputs.

Donors use several methods to assess performance with output data. A primary method is to set targets for output indicators at the project level and/or organizational level, and assess whether these output targets (column 2) were met. Two bilateral donors, SIDA (Sweden) and DFID (UK), consistently assess performance in this way. Another bilateral donor, USAID, sets targets for output indicators, but its overall results management system has been criticized as incomplete because it focuses on accountability for funds rather than on outputs, outcomes, or impacts. Multilateral donors appear to assess performance against output targets more consistently (seven of ten) than bilateral donors. For example, the Asian Development Bank sets output targets at the aggregated project level (number of beneficiaries reached) and at the organizational level (number of projects completed and rated "satisfactory").

Output data can also be used to compare results across projects (column 3), provided that donors use comparable indicators and results measurement systems. None of the bilateral donors and four of ten multilateral donors have processes for comparing output results across projects. For example, an external review of the World Bank Group found that the organization's Independent Evaluation Group uses standardized indicators and reporting forms to compare results across projects.

Some donors specify an organization-wide list of output indicators in order to standardize indicators across projects. Of the 12 bilateral donors, there was insufficient information to assess nine. AFD (France) is rated "low", with little evidence that output indicators are used consistently across projects; USAID is rated "medium", with some evidence of collecting specified output indicators; and DFID is rated "high", with strong evidence of collecting and aggregating standardized output indicators. In contrast, seven of ten multilateral donors are rated "high". For example, the World Food Program both aggregates and reports numbers of beneficiaries and amounts of food distributed across projects.
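Why a standard indicator list matters for aggregation can be shown with a small sketch: indicators on the organization-wide list can be summed across projects, while project-specific indicators cannot. The indicator names, the project reports, and the function below are all hypothetical, loosely modeled on the World Food Program example.

```python
from collections import Counter

# Hypothetical organization-wide list of standard output indicators.
STANDARD_INDICATORS = {"beneficiaries_reached", "food_distributed_tonnes"}


def aggregate_outputs(project_reports: list[dict[str, float]]):
    """Sum standard indicators across projects and collect the names of
    non-standard indicators, which cannot be aggregated meaningfully."""
    totals = Counter()
    nonstandard = set()
    for report in project_reports:
        for indicator, value in report.items():
            if indicator in STANDARD_INDICATORS:
                totals[indicator] += value
            else:
                nonstandard.add(indicator)
    return dict(totals), nonstandard


if __name__ == "__main__":
    reports = [  # hypothetical project-level output reports
        {"beneficiaries_reached": 1200, "food_distributed_tonnes": 35.5},
        {"beneficiaries_reached": 800, "people_sensitized": 300},
    ]
    print(aggregate_outputs(reports))
```

Here the two projects' beneficiaries sum to 2,000, while "people_sensitized" is flagged because no other project reports it on a comparable basis.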

Two of 12 bilateral donors and three of 10 multilateral donors identify challenges in collecting information about outputs. Poor data quality is a common challenge, with DFID’s 2011 Multilateral Aid Review finding that data quality is often poor due to an inadequate results framework.

Outcomes and Impact

"Outcomes" are desired results caused by outputs. For example, the delivery of vaccines, an output, may change the recipient country's child mortality rate, a desired outcome. Unlike outputs, outcomes can serve as performance measures only if donors attribute them to program activities. Some donors do this with experimental (randomized) or quasi-experimental methods, comparing results in control groups (those not exposed to the activity or intervention) with results in treatment groups (recipients who otherwise resemble the control group). Others simply assume that changes in outcome measures in a program area are at least partly due to the program.

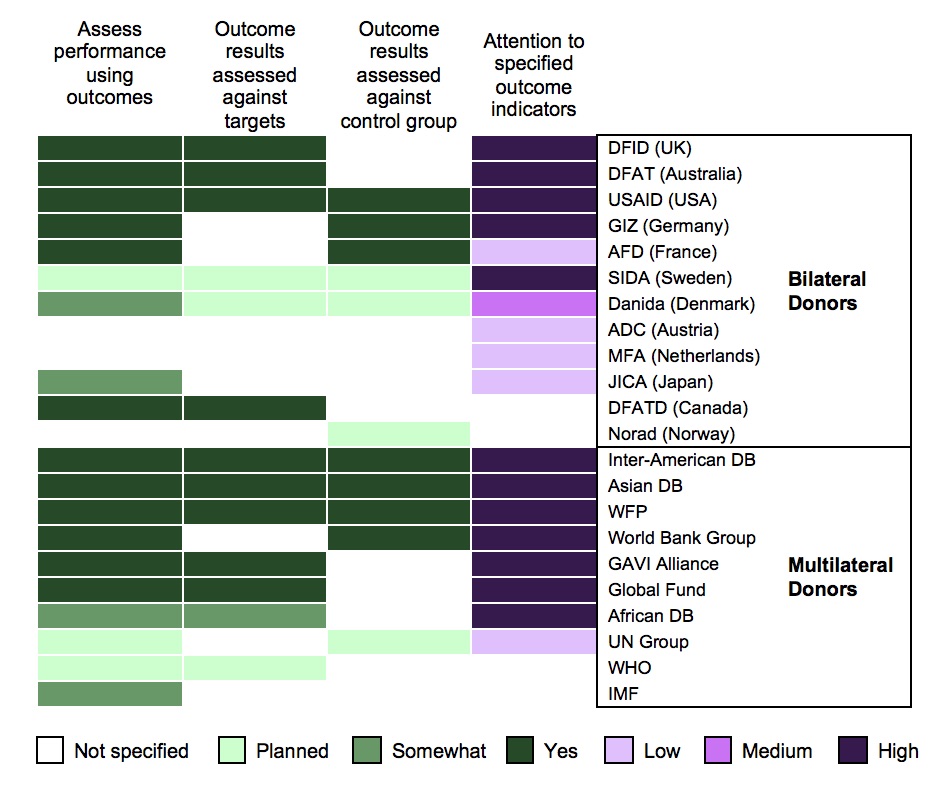

Half of bilateral donors (six of 12) and more than half of multilateral donors (six of ten) assess performance in terms of outcomes resulting from their projects (Figure 3, column 1). Two bilateral and two multilateral donors "somewhat" assess performance in terms of outcomes.

As with output-level data, donors assess outcome-level data by setting targets and then assessing performance against those targets (column 2). We find that four of 12 bilateral donors and five of ten multilateral donors consistently assess performance in terms of meeting outcome-level targets. For example, the IDB's evaluations assess changes in outcomes against targets and assess the extent to which those changes are attributable to the IDB's programs.

Donors may also use control groups to assess outcome-level results (column 3). Comparing changes in treatment groups with changes in control groups provides additional confidence in attributing treatment-group changes to the donors' programs. Four multilateral and three bilateral donors specify using control groups. The Asian Development Bank had 24 ongoing impact evaluations as of 2013, and 81 percent of the World Bank Group's ongoing evaluations use experimental methods.
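One common way to compare changes in treatment and control groups is a difference-in-differences calculation: the change in the treatment group minus the change in the control group. The sketch below shows that technique with hypothetical school-enrollment rates (in percent); the reviewed documents do not say which estimators individual donors use, so this is an illustration rather than any donor's method.

```python
from statistics import mean


def difference_in_differences(treat_before, treat_after,
                              control_before, control_after):
    """Estimate a program effect as the treatment group's change in mean
    outcome minus the control group's change over the same period."""
    treatment_change = mean(treat_after) - mean(treat_before)
    control_change = mean(control_after) - mean(control_before)
    return treatment_change - control_change


if __name__ == "__main__":
    # Hypothetical enrollment rates (%) in sampled districts.
    effect = difference_in_differences(
        treat_before=[60, 62], treat_after=[70, 72],
        control_before=[59, 61], control_after=[63, 65],
    )
    print(f"Estimated effect: {effect} percentage points")
```

Treatment districts improve by 10 points and control districts by 4, so 6 points are attributed to the program. This attribution rests on the assumption that both groups would have followed parallel trends without the program.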

Using the same ratings scale for outcomes as for the assessment of outputs (column 4), EPAR finds five of 12 bilateral donors receive a "high" rating, one receives a "medium" rating, four receive a "low" rating, and two are "not specified". DFID, a "high"-rated donor, tracks outcomes related to the MDGs and reports figures for these outcome indicators in its annual report. Danida, a "medium"-rated donor, also tracks MDG-related outcomes, but does so inconsistently. Of the 10 multilateral donors, seven are rated "high", one is rated "low", and two are "not specified". The UN Group is rated "low" because, although it specifies that the MDGs are a priority across the UN system, there is no evidence that MDG-based outcome data are collected system-wide.

Issues with the quality of baseline data and an organizational focus on outputs or processes over outcomes are the most commonly identified challenges for measuring outcome-level results. For instance, Norad's evaluations are criticized for not mentioning outcome-level results at all. Even where results frameworks are adequate, some donors report difficulty attributing changes in outcomes to donor programs and measuring outcomes in certain sectors.

Costs and Cost-Effectiveness

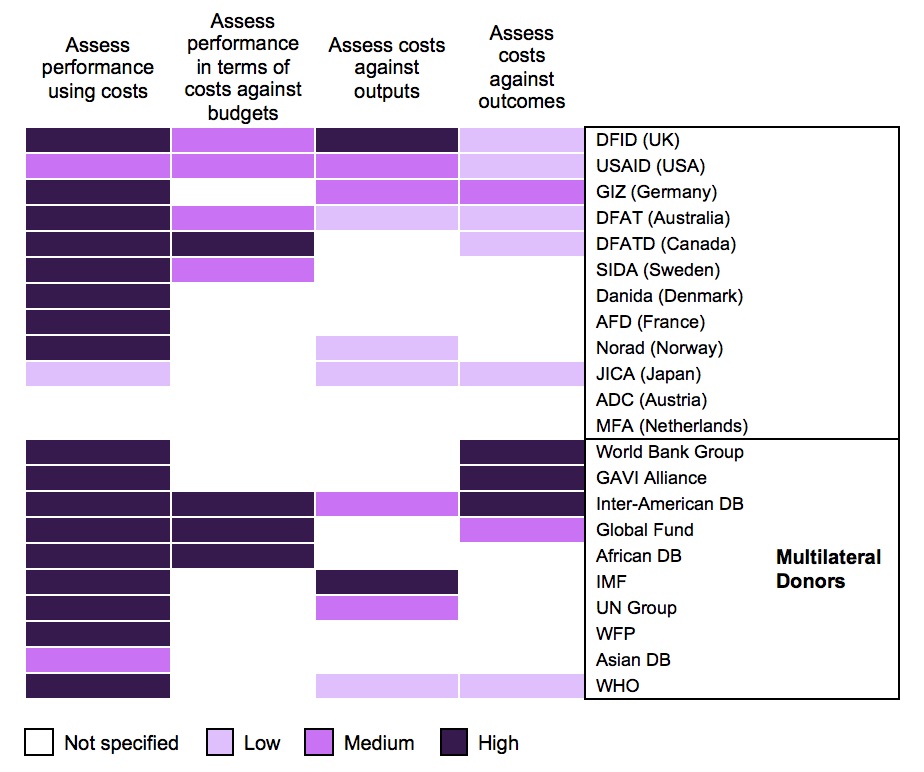

Donors measure and report costs with varying levels of attention and analysis. EPAR reports on four categories of performance assessment related to cost (Figure 4): reporting costs/spending, and comparing costs against budgets, outputs, and outcomes. While reporting costs and spending on projects can improve aid transparency, assessing cost-effectiveness of aid flows generally requires a “per unit” analysis of costs; for example, comparing costs to outputs or outcomes, or between projects and programs.

Seventeen donors are rated "high" and two are rated "medium" for assessing performance in terms of spending on donor-funded projects (column 1). Donors with "high" ratings explicitly report using costs to assess performance, while those with "medium" ratings report costs but do not specify how costs are used to measure performance. In general, donors measure and report costs in terms of allocations to different projects, countries, or sectors, or as reports on total expenditures. For example, annual reports for DFAT include the amount of funding allocated to different programs.

Fewer donors (eight of 22) receive "high" or "medium" ratings for comparing costs or expenditures against budgets to assess implementation performance (column 2). While some donors simply list budget amounts next to expenditures, others assess the proportion of project spending that was in line with budgets. This type of results measurement is primarily used to evaluate an organization's implementation performance and to support the organization's accountability to its stakeholders. For example, the Canadian DFATD's 2013-14 Departmental Performance Report breaks down planned and actual spending by program and sub-program and annotates reasons for discrepancies between planned and actual spending.
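The second approach described above, measuring the proportion of spending that was in line with budgets, can be sketched as follows. Program names and amounts are hypothetical, and the ±10 percent tolerance for counting spending as "in line" is an assumption; the reviewed reports do not define a threshold.

```python
from dataclasses import dataclass


@dataclass
class ProgramSpending:
    """Planned versus actual spending for one program (hypothetical data)."""
    name: str
    planned: float
    actual: float

    def __post_init__(self) -> None:
        if self.planned <= 0:
            raise ValueError("planned spending must be positive")

    @property
    def variance_pct(self) -> float:
        """Actual minus planned spending, as a percentage of plan."""
        return 100 * (self.actual - self.planned) / self.planned


def share_in_line_with_budget(programs: list[ProgramSpending],
                              tolerance_pct: float = 10.0) -> float:
    """Share of total actual spending from programs whose spending stayed
    within +/- tolerance_pct of plan (10% default is an assumption)."""
    total = sum(p.actual for p in programs)
    if total == 0:
        raise ValueError("no spending recorded")
    in_line = sum(p.actual for p in programs
                  if abs(p.variance_pct) <= tolerance_pct)
    return in_line / total


if __name__ == "__main__":
    programs = [
        ProgramSpending("Health", planned=100.0, actual=95.0),
        ProgramSpending("Education", planned=200.0, actual=260.0),
        ProgramSpending("Water", planned=50.0, actual=45.0),
    ]
    for p in programs:
        print(f"{p.name}: {p.variance_pct:+.1f}% versus plan")
    share = share_in_line_with_budget(programs)
    print(f"Share of spending in line with budget: {share:.0%}")
```

In this example only the Education program overspends beyond the tolerance, so 140 of 400 units of spending (35 percent) count as in line with budget.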

In addition to comparing costs to budgets, donors may assess implementation performance by comparing costs against outputs achieved (column 3). Programs that produce more outputs per dollar spent are considered more cost-effective. Three agencies are rated "high" for measuring costs against outputs. DFID's Annual Report in 2014 includes indicators such as "cost per child supported in primary education" and "average unit price of long-lasting insecticide treated per bed-net procured". Three donors are rated "medium" and four are rated "low".
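A unit-cost indicator such as "cost per child supported in primary education" is total cost divided by outputs delivered, which then allows projects to be ranked. The sketch below uses two hypothetical projects; it is not DFID's calculation, which may adjust costs in ways the annual report describes.

```python
def cost_per_output(total_cost: float, outputs: float) -> float:
    """Unit cost: total spending divided by the number of outputs delivered."""
    if outputs <= 0:
        raise ValueError("outputs must be positive")
    return total_cost / outputs


# Hypothetical projects: (name, total cost in USD, children supported).
projects = [
    ("Project A", 1_500_000, 10_000),
    ("Project B", 900_000, 4_500),
]

if __name__ == "__main__":
    # Rank projects from lowest to highest cost per child supported.
    ranked = sorted(projects, key=lambda p: cost_per_output(p[1], p[2]))
    for name, cost, children in ranked:
        print(f"{name}: ${cost_per_output(cost, children):,.2f} per child supported")
```

Project A costs $150.00 per child and Project B $200.00, even though Project B spends less in total. A lower unit cost says nothing by itself about the quality of outputs or the outcomes they produce.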

To further assess the cost-effectiveness of programs, certain donors compare costs to realized outcomes (column 4). Programs that achieve desired outcomes at a lower cost are considered more cost-effective. Only three donors are rated "high". GAVI Alliance, for example, is one of the few agencies that conducts in-depth analyses, in a small number of countries, to assess the impact and cost-effectiveness of introducing new vaccines and scaling up their coverage. Two donors receive "medium" ratings and six receive "low" ratings. Half of all donors (11 of 22) do not specify whether they measure cost-effectiveness. For some of these "not specified" donors, cost-effectiveness indicators may exist (e.g., for individual projects) but are not reported in the reviewed documents. An evaluation of the World Bank Group's impact evaluations, for instance, indicates that cost-effectiveness received less attention because projects were often funded from multiple sources, making it difficult to account for all project costs.
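When comparing a new option (such as scaling up vaccine coverage) with the current program, cost-effectiveness analyses often report an incremental cost-effectiveness ratio: the extra cost divided by the extra outcome achieved. The sketch below applies this standard formula to hypothetical figures; we do not know which metric GAVI or other donors actually use.

```python
def incremental_cost_effectiveness_ratio(cost_new: float, effect_new: float,
                                         cost_current: float,
                                         effect_current: float) -> float:
    """Extra cost per extra unit of outcome (e.g. per death averted)
    when moving from the current program to the new one."""
    delta_effect = effect_new - effect_current
    if delta_effect == 0:
        raise ValueError("identical effects: ratio is undefined")
    return (cost_new - cost_current) / delta_effect


if __name__ == "__main__":
    # Hypothetical figures: current coverage vs. scaled-up coverage.
    icer = incremental_cost_effectiveness_ratio(
        cost_new=3_200_000, effect_new=700,         # deaths averted
        cost_current=2_000_000, effect_current=400,
    )
    print(f"${icer:,.0f} per additional death averted")
```

Scaling up here costs an extra $1.2 million to avert 300 more deaths, or $4,000 per additional death averted. As the World Bank Group example shows, the hard part in practice is measuring the cost and outcome inputs, not the arithmetic.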

EPAR finds that most donor organizations measure and report results using some mixture of outputs, outcomes, and costs, and describe steps toward improving their alignment and harmonization with country-level systems. Many donors, however, report challenges with aggregating results at the organization level, especially for more complex measures such as outcomes and cost-effectiveness. We find that few donors compare output results across projects, have clear methods for evaluating impacts of aid flows, or use spending measures in combination with output or outcome data to measure cost-effectiveness. A recent study finds that current aggregate results measures reported by donor agencies are not sufficient for evaluating aid effectiveness or for donor accountability, and highlights several challenges and potential adverse effects of measuring aggregate organization-level results. The author argues that donors should invest in independent and rigorous evaluations and should also report results of individual interventions, to supplement aggregate results measures and increase aid transparency.

References to evidence linked in this post are included in EPAR’s full report.
